By vivian on November 23, 2019

The start of November is all about speed. If you haven’t yet, check out the new Speed report in your site’s Search Console.

Google’s Webmaster Conference Mountain View: Product Summit 2019 (gwcps) also happened, and we will have a post coming up real soon, so stay tuned and enjoy this week’s SEO news update.

Google has repeatedly stressed the importance of speed, and the Speed report last shown at Google I/O 2019 is finally going live for all Search Console users.

We’re excited to begin the public rollout for the Search Console Speed report 💨! Let’s make the web faster together 💪 https://t.co/LWITJnnTVq pic.twitter.com/45vwLXYj4m

— Google Webmasters (@googlewmc) November 4, 2019

Drawing data from the Chrome User Experience Report, the speed report will automatically group URLs into “Fast”, “Moderate”, and “Slow” buckets.

Though launched, the report is still classified as “experimental” and webmasters are welcome to submit feedback to help make the tool better.

Here are a few prominent SEOs in the industry sharing their opinions on, and excitement about, the new report.

Checking out already the new speed report in the Search Console 😍👌 Request: It would be nice to also have there the main issues affecting the pages speed (without having to go to pagespeed insights) cc @JohnMu pic.twitter.com/PtfNZxdjZJ

— Aleyda Solis (@aleyda) November 4, 2019

Great news: page speed reporting available in GSC!

“To help site owners, the Speed report automatically assigns groups of similar URLs into “Fast”, “Moderate”, and “Slow” buckets.” https://t.co/QNLWBvz3Dy

— Lily Ray (@lilyraynyc) November 4, 2019

Early thoughts on the new Speed report in Google Search Console: the primary impact I see so far is that it elevates the discussions about page load times to a higher authority level. Makes it easier to get buy-in to optimise for speed. And that’s a Very Good Thing.

— Barry Adams (@badams) November 5, 2019

Martin Splitt from Google announced at the Chrome Dev Summit 2019 that the median time for Googlebot to render a page is 5 seconds.

Even the 90th percentile is done within minutes, he said, adding that you no longer have to worry about week-long rendering delays after Googlebot crawls your page.

All of us know the importance of HTTPS, right? Most of you here have probably moved to HTTPS already, and after moving, you’d expect to see a gray lock beside the URL.

However, there are cases where webmasters do not see it. Why? It usually means the page has mixed content.

Mixed content is when an HTTPS page includes content, such as images, videos, and scripts, that is loaded over an insecure HTTP connection. Google is changing the way it handles mixed content, and has mentioned that the upcoming change may break the page for users and Googlebot.

To make things right, all you need to do is move all your site resources to HTTPS. If you’re unsure which resources are affected, you can use Chrome’s Developer Tools to find them.
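If you would rather check a page’s HTML in bulk than open Developer Tools for each URL, the idea can be sketched in a few lines of Python. This is a minimal illustration, not an official Google tool: the function name `find_mixed_content` and the list of tags it inspects are our own assumptions, and it only looks at static HTML, so resources injected by JavaScript or referenced from CSS will be missed.

```python
from html.parser import HTMLParser

# Tag -> attribute that points at a subresource loaded by the page.
# Hypothetical, non-exhaustive list chosen for illustration.
RESOURCE_ATTRS = {
    "img": "src",
    "script": "src",
    "iframe": "src",
    "video": "src",
    "audio": "src",
    "source": "src",
    "link": "href",
}


class MixedContentFinder(HTMLParser):
    """Collects (tag, url) pairs for subresources requested over http://."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        # Only stylesheet links load a resource; skip canonical, alternate, etc.
        if tag == "link" and "stylesheet" not in (attr_map.get("rel") or "").lower():
            return
        wanted = RESOURCE_ATTRS.get(tag)
        if wanted is None:
            return  # e.g. <a href="http://..."> is a plain link, not mixed content
        value = attr_map.get(wanted)
        if value and value.lower().startswith("http://"):
            self.insecure.append((tag, value))


def find_mixed_content(html):
    """Return the insecure subresources found in an HTML string."""
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure


if __name__ == "__main__":
    page = (
        '<img src="http://example.com/banner.png">'
        '<script src="https://example.com/app.js"></script>'
    )
    for tag, url in find_mixed_content(page):
        print(f"<{tag}> loads {url} over HTTP")
```

Every URL it reports is a resource that needs to be moved to HTTPS.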

Ending this with a quote from Google: “No need to be insecure if your website is secure! #happyemoji”.

Read full guide here: What is Mixed Content?

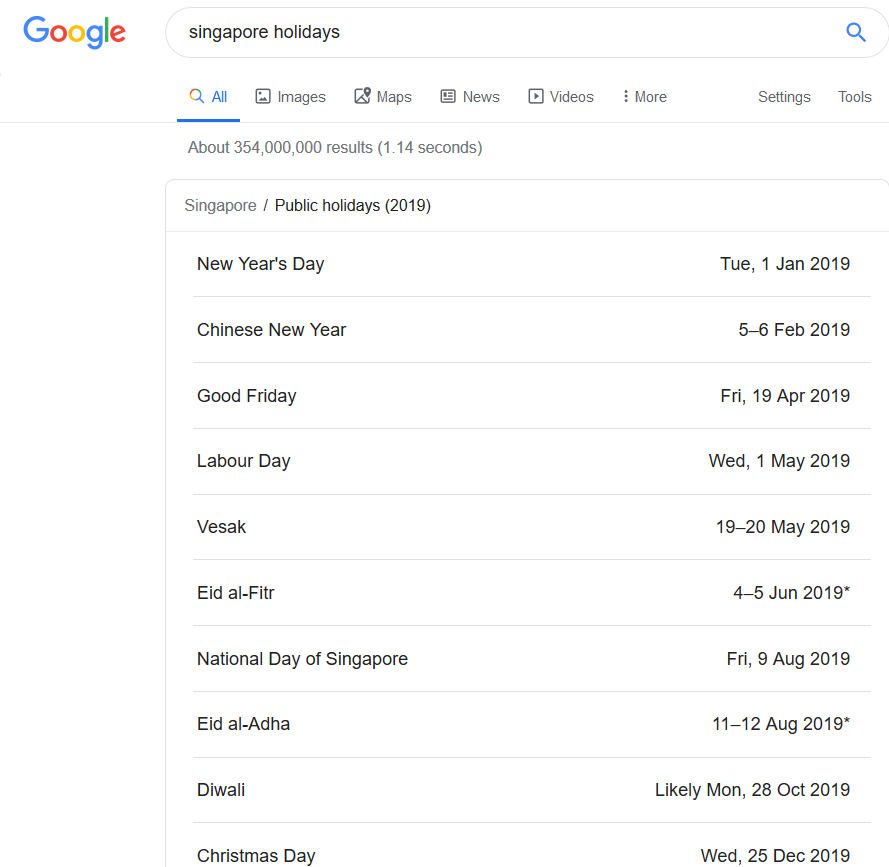

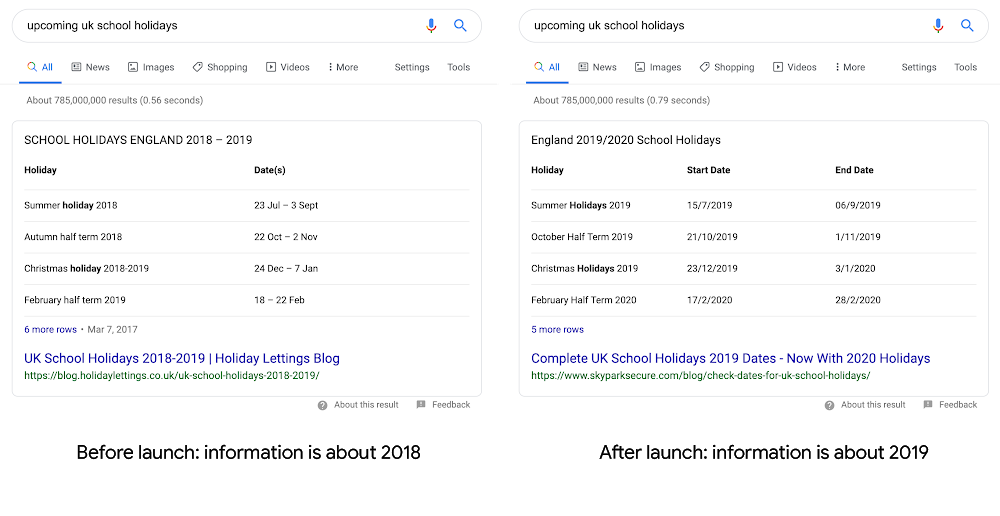

Valentin Pletzer tweeted about a new Google feature: instead of returning a series of results or a featured snippet, Google can now provide an instant answer for holiday searches, as reported by Barry Schwartz.

We tried to reproduce it, and indeed, it shows an expandable, Google-native listing of the holidays for the area you searched for.

For comparison, this is how holiday queries were returned a few months ago (credit to Barry).

Kieron Hughes said on Twitter that he noticed Google testing SERPs in which a site’s normal listing is removed when Google displays that URL in the featured snippet.

Example: Legal & General in the featured snippet, and their actual ranking URL has been removed from the SERP entirely pic.twitter.com/Zy17NazLkf

— Kieron Hughes (@kieronhughes) November 12, 2019

What does this mean?

If you already have a URL in the featured snippet at the top of the page, it would make sense not to repeat it in the core 10 web results.

Without a second listing on the page, click-through rate and traffic may be affected. Google previously tested this in 2017.

There’s a new stat in Google Search Console! However, it’s only for those who have products and use product rich results on Google Search.

Website owners are now able to get data on impressions and clicks for their rich results. Ultimately, it helps webmasters understand and optimize the performance of their website’s results on Google Search. The metrics can be further segmented by device, geography, and query.

If your website is eligible to appear in Product search results, you’ll see a new Search Appearance type called “Product results”.

If you don’t see it, refer to Google’s markup guide for products: Product. It teaches you how to mark up your product information so Google can display rich results.
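As a rough illustration of what such markup looks like, the sketch below builds a schema.org `Product` block in JSON-LD and wraps it in the script tag that goes into the page. The helper name `product_jsonld` and all the sample values are placeholders of ours, and the property set is deliberately minimal, so check Google’s guide for the properties your pages actually need.

```python
import json


def product_jsonld(name, price, currency, url):
    """Build a minimal schema.org Product snippet as an HTML script tag.

    Hypothetical helper for illustration only; the fields shown are a
    small subset of what the Product type supports.
    """
    data = {
        "@context": "https://schema.org/",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "url": url,
            "availability": "https://schema.org/InStock",
        },
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    # Placeholder product details, not real data.
    print(product_jsonld("Example Widget", 19.9, "USD", "https://example.com/widget"))
```

Paste the generated script tag into the product page’s HTML, then validate it with Google’s structured data testing tools before relying on it.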

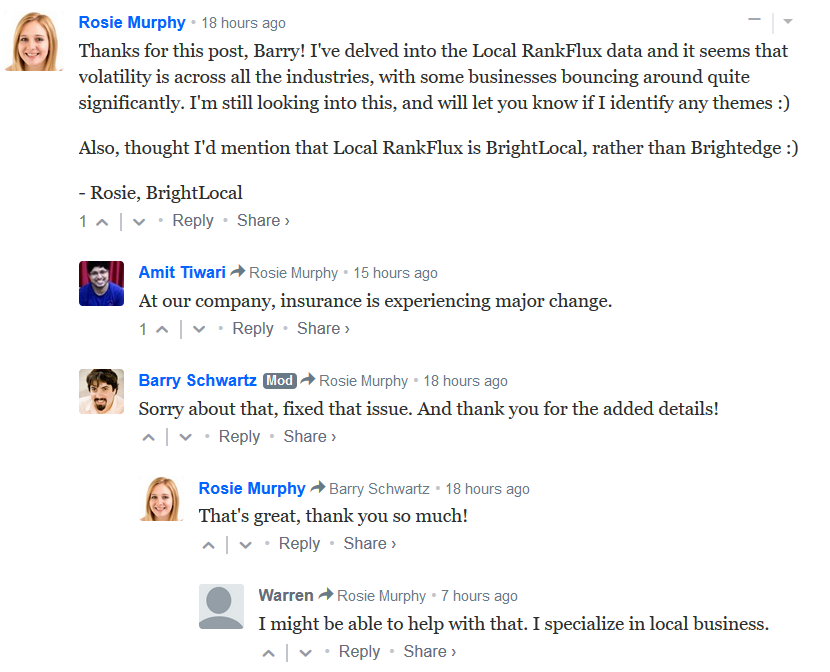

Barry Schwartz noticed chatter on both Twitter and the Local Search Forum, and after his post, a flood of comments reported the same thing happening to their listings.

Rosie Murphy from BrightLocal also chimed in to provide more details.

According to their tool, Local RankFlux, there is volatility across all industries.

No official updates from Google so far.

Frédéric Dubut of Bing announced a new spam penalty against “inorganic site structure”.

Those affected include: PBN and link networks, doorways and duplicate content, subdomain or subfolder leasing, and spammy web hosts and hacked sites.

These are all common techniques used by black hat SEOs to gain authority and “link juice”. Bing also published a helpful post outlining how these practices usually work.

Those who believe they are unfairly penalized can file a reconsideration request via Bing Webmaster Support.

Martin Splitt from Google tweeted that he and Lizzi Harvey have worked on extending the JS guides to include web components.

Here are the links to the documents:

https://developers.google.com/search/docs/guides/javascript-seo-basics#web-components

https://developers.google.com/search/docs/guides/fix-search-javascript

There are a large number of SEOs out there, including yours truly, who believe that user behavior signals like dwell time, bounce rate, and exit rate can affect your page rankings.

Googlers, however, usually deny this.

The friendly Googler Martin Splitt, best known for his JavaScript SEO series (and usually spotted with his fabulous pastel coloured hairstyle), chimed in on this matter.

He was asked “Does Google (Search Algorithm) use UX (such as dwelling time/time on page, etc.) as a ranking factor? If so, how Google get the data? Does it use Google Analytics data or something else?”

The answer? “We’re not using such metrics.”

We’re not using such metrics.

— Martin Splitt @ 🔜🇨🇭🏡 (@g33konaut) November 6, 2019

Do you think otherwise? Let us know, we’re curious.

Updated: 29 June 2025
