
By winniewong on July 9, 2016

If it seems as though the Internet is becoming sluggish to browse, you’re not alone. Websites today are becoming increasingly cluttered and slow to load, and that’s a big problem. It’s a problem for users, and it’s a problem for the web page owners themselves.

A website should be as fast-loading as humanly possible. If your own page is similarly becoming slow to download and display, it’s probably time for a round of revisions to help optimize it again. First, though, let’s take a look at why this is such a problem that it needs to be rapidly addressed.

There are several inter-connected issues here that all have to do with slow-loading or bandwidth-heavy pages. These are some of the highlights:

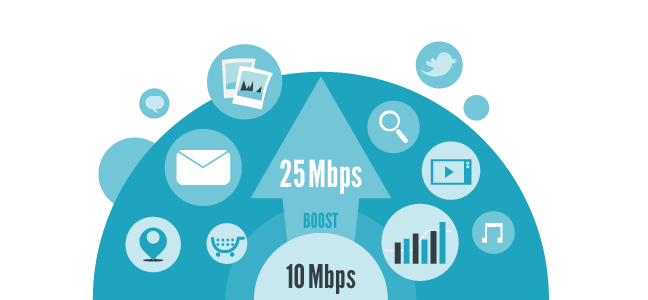

As of mid-2015, there were officially more mobile web searches than those on desktops. While most home internet connections have (nearly) unlimited bandwidth, the same is not true for mobile devices using 3G/4G connections. A bandwidth-heavy site, especially one which utilizes auto-playing video or audio, could literally be costing every visitor money.

As such, mobile users are very aware of their bandwidth caps and will actively avoid pages they know to be data hogs.

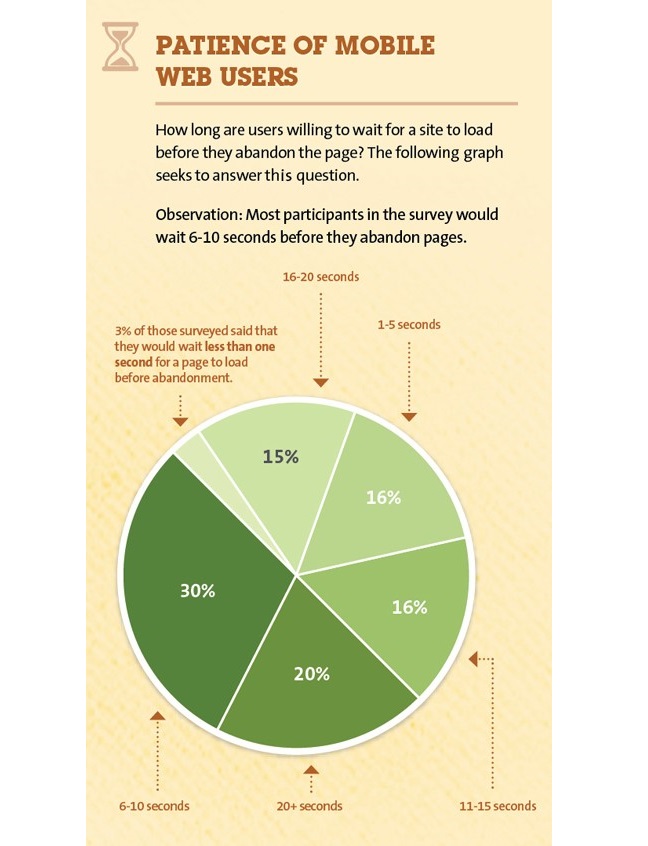

According to the experts at KissMetrics, the optimal load time for a website is around 3 seconds. Why? Because after that, users will start abandoning the page quickly.

Even a 1-second delay in page loading can cost an online retailer approximately 7% of sales, and of course, those losses grow worse as the seconds tick past.

Online users have very short attention spans and a high desire for instant gratification. With literally billions of pages online, if they can’t load Site A, they’ll start looking at Sites B or C almost immediately.

Google plays a role here too. As far back as 2010, Google was concerned about page speed. It began incorporating load times into its User Experience (UX) evaluations of websites, penalizing those which take too long to load.

So besides annoying customers and driving them away, excessive page load times can ultimately hurt your search ranking placement as well. Google will give preference to quick-loading, low-bandwidth pages, especially in the mobile sphere.

Plus, at the most basic level: A smooth, fast-loading page will stand out among the competition. This is one of the aspects of web design which is quite noticeable to users, and they’ll quickly recognize which pages are legitimately nice to use.

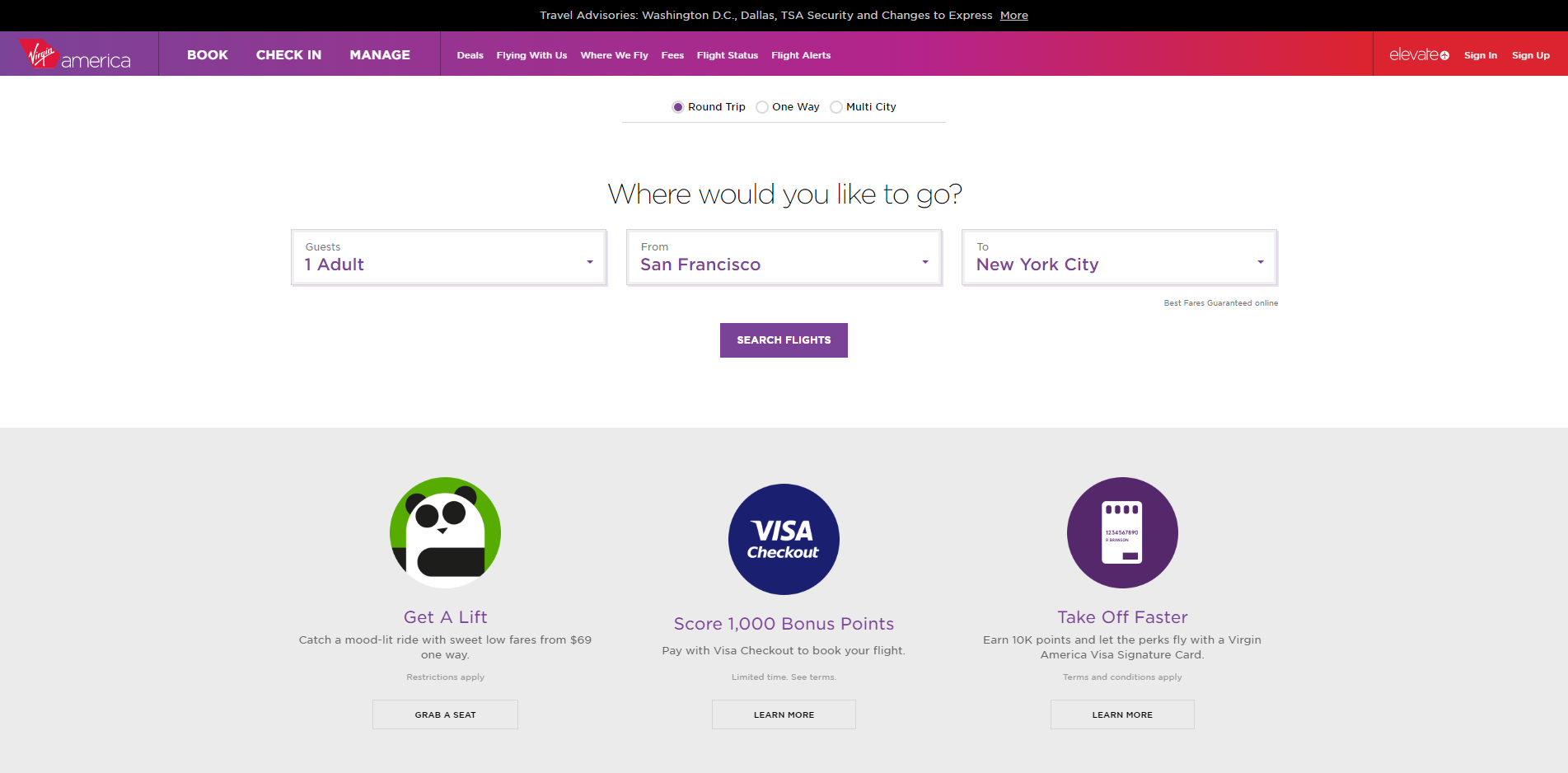

A good example would be the new Virgin America travel booking site. The revamped website now allows customers to book their flights nearly twice as fast as on the previous website.

In their newly redesigned website, they have removed all the usual vacation ads, credit card promos, and car rental offers.

Virgin America now gives a brand new online experience for customers with their clean and responsive website.

The good news is that there are plenty of ways to optimize a website for speed, and most of them don’t take a lot of time or money to implement. Even if they did, the rewards would still be well worth the effort, given what an issue this is becoming. A smooth, quickly-loading page will greatly increase your appeal.

We’ll go ahead and skip over some of the obvious suggestions, such as utilizing smaller images or not deploying auto-playing audio or video. Most of the suggestions below are “back end” solutions, which will do far more good in the long run.

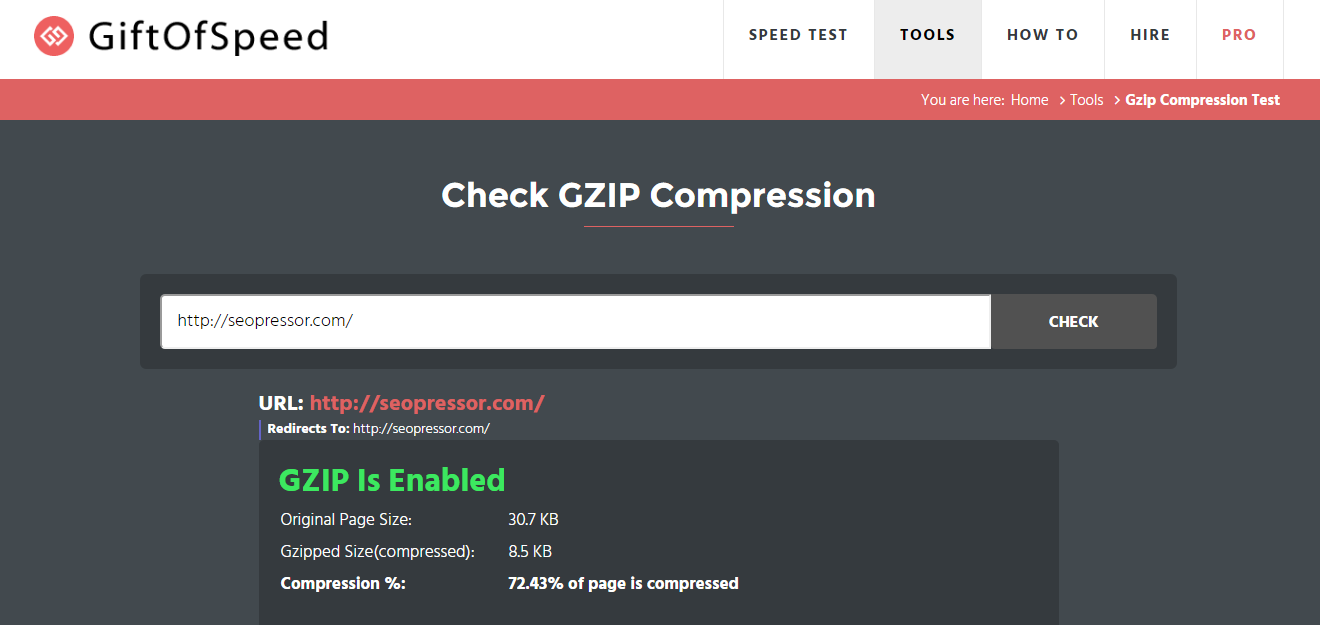

This is a great and sorely under-utilized feature which nearly all major servers and web hosts support (even WordPress) and which is likewise supported on major user-side browsers.

Simply put, your server/host will automatically provide Gzip-compressed files to users, which are generally around 30% of the size of the uncompressed files. These are then seamlessly decoded by browsers. The minuscule amount of extra time spent decompressing the files is far outweighed by the data savings.

If you’re uncertain whether this is already enabled, go here for a quick test of your website. They also have an excellent guide to enabling Gzip on most major platforms.

By enabling Gzip compression, your visitors will get to download smaller files when browsing your site.
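To get a feel for the savings, here is a minimal sketch using Python's standard `gzip` module. The sample markup is made up for illustration, and because it is far more repetitive than a real page, it compresses much better than the typical figure quoted above. Treat the ratio it prints as a demonstration of the mechanism, not a benchmark.

```python
import gzip

# Hypothetical page body: repetitive markup, invented for this example.
html = (
    "<html><body>"
    + "<div class='product'><span>Item</span></div>" * 500
    + "</body></html>"
).encode("utf-8")

# What a Gzip-enabled server would send instead of the raw bytes.
compressed = gzip.compress(html)
ratio = len(compressed) / len(html)

print(f"original:   {len(html)} bytes")
print(f"compressed: {len(compressed)} bytes ({ratio:.1%} of original)")

# The browser reverses this step transparently and gets the same bytes back.
restored = gzip.decompress(compressed)
```

In practice you would not compress files by hand like this; the server or host does it automatically once the feature is switched on.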

Database indexing is especially effective for e-commerce sites that may have hundreds, or even thousands, of individual pages with their associated graphics. The links are almost certainly being stored in a central database, but as the collection of content grows, the access times for that database will grow larger to match.

Indexing your database can help to speed up the process of retrieving data because the data is arranged systematically.

Simply running an index function periodically on the database will vastly reduce the amount of time the server requires to find and return the proper items. This can easily take a page that requires 5-10 seconds to load down to 1 second or less.
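The effect of an index can be sketched with Python's built-in `sqlite3` module. The `products` table, the `sku` column, and the index name are all hypothetical, and SQLite stands in here for whatever database your site actually uses. The sketch asks the database how it plans to run the same lookup before and after the index exists.

```python
import sqlite3

# In-memory database with a hypothetical product catalog.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, sku TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO products (sku, name) VALUES (?, ?)",
    [(f"SKU-{i:05d}", f"Product {i}") for i in range(10_000)],
)


def query_plan(connection):
    """Return SQLite's plan for looking up one product by SKU."""
    rows = connection.execute(
        "EXPLAIN QUERY PLAN SELECT name FROM products WHERE sku = ?",
        ("SKU-04242",),
    ).fetchall()
    # The last column of each row is the human-readable plan step.
    return " | ".join(row[-1] for row in rows)


plan_before = query_plan(conn)  # full table scan: every row is examined
conn.execute("CREATE INDEX idx_products_sku ON products (sku)")
plan_after = query_plan(conn)   # index search: jumps straight to the match

print("before:", plan_before)
print("after: ", plan_after)
```

The exact wording of the plan varies between SQLite versions, but the first should describe a scan of the whole table and the second a search using `idx_products_sku`.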

Do you have a website that uses a ton of images that are high-resolution or high-detail and can’t be compressed much without wrecking them? Lazy loading may be the answer.

Simply put, this means that the web page doesn’t pre-load every image, but rather waits until the user is (nearly) looking at them.

So, it doesn’t reduce data usage, but can greatly streamline initial load times.

There are a couple of good and relatively easy-to-implement JavaScript libraries that can handle this. We recommend either bLazy.js or Echo.js, which are both low-overhead and extremely tiny (<1.5 KB).
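The decision at the heart of lazy loading can be sketched in a few lines. This is a conceptual model in Python, not how either library is implemented: the `Image` class, the pixel values, and the 200-pixel `margin` are assumptions chosen for illustration. An image is fetched only once its box overlaps the visible area, extended by a small margin so it is ready just before the user reaches it.

```python
from dataclasses import dataclass


@dataclass
class Image:
    src: str
    top: int     # distance from the top of the page, in pixels
    height: int  # rendered height, in pixels


def images_to_load(images, scroll_top, viewport_height, margin=200):
    """Return images that overlap the viewport extended by `margin` pixels."""
    window_top = scroll_top - margin
    window_bottom = scroll_top + viewport_height + margin
    return [
        img for img in images
        if img.top < window_bottom and img.top + img.height > window_top
    ]


# Hypothetical page layout with three images.
page = [
    Image("hero.jpg", top=0, height=600),
    Image("gallery-1.jpg", top=900, height=400),
    Image("gallery-2.jpg", top=2500, height=400),
]

first_paint = [i.src for i in images_to_load(page, scroll_top=0, viewport_height=800)]
after_scroll = [i.src for i in images_to_load(page, scroll_top=1800, viewport_height=800)]

print(first_paint)   # ['hero.jpg', 'gallery-1.jpg']
print(after_scroll)  # ['gallery-2.jpg']
```

A real implementation would also remember which images it has already fetched, so that nothing is requested twice as the user scrolls back and forth.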

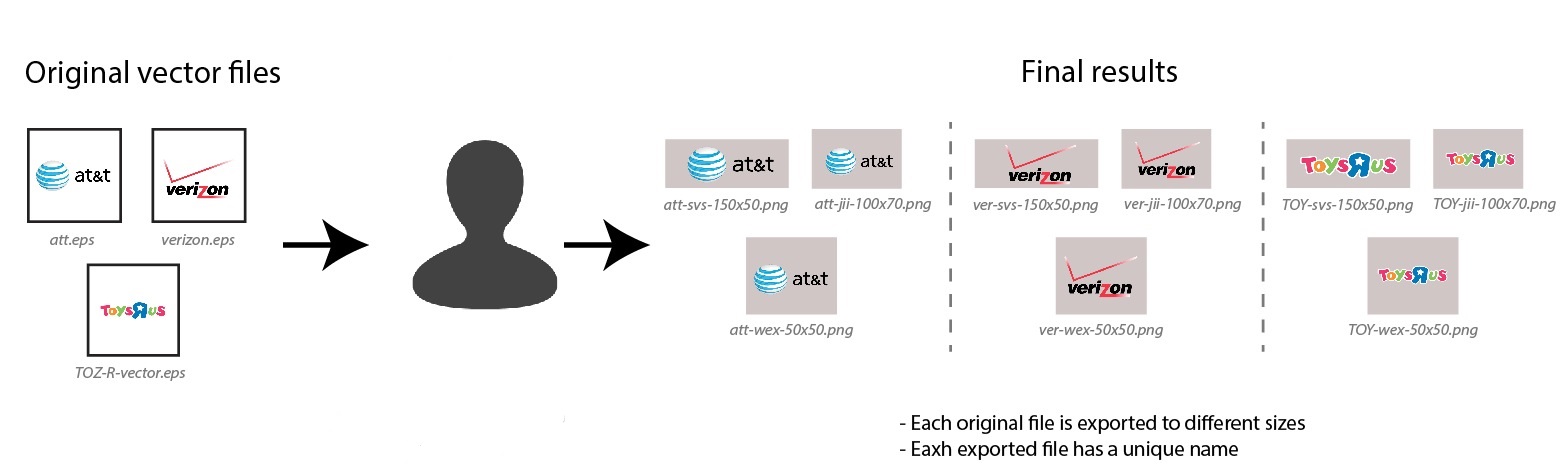

A lot of websites like to utilize one single graphic file, and then have HTML code automatically resize the image to fit the user’s page. This is easier on web designers and slightly more space-efficient for them, but it’s terrible for load times and bandwidth usage.

The entire file has to be downloaded, plus there’s then extra processing time while the web page figures out the user’s screen dimensions and scales the image to match.

It takes a little more work and space at the back end, but having several versions of the same file at different pre-set resolutions will greatly reduce the time and effort taken on the user’s side.

This translates to faster loading and lower bandwidth use.
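Choosing among pre-sized versions can be sketched as follows. The function name, the three widths, and the `pixel_ratio` parameter are assumptions made for this example. The idea is simply to serve the smallest stored version that still covers the user's screen, falling back to the largest one when nothing is big enough.

```python
def pick_variant(available_widths, viewport_width, pixel_ratio=1.0):
    """Return the smallest stored width that covers the screen.

    `pixel_ratio` accounts for high-density screens, which need more
    image pixels than their nominal width suggests.
    """
    candidates = sorted(available_widths)
    if not candidates:
        raise ValueError("no image variants available")
    needed = viewport_width * pixel_ratio
    for width in candidates:
        if width >= needed:
            return width
    return candidates[-1]  # nothing is large enough, so use the biggest


# Hypothetical set of pre-generated widths, in pixels.
VARIANTS = [480, 960, 1920]

phone = pick_variant(VARIANTS, viewport_width=375)
dense_phone = pick_variant(VARIANTS, viewport_width=375, pixel_ratio=2.0)
large_monitor = pick_variant(VARIANTS, viewport_width=2560)

print(phone, dense_phone, large_monitor)  # 480 960 1920
```

With this in place, a phone user downloads the 480-pixel file rather than the full 1920-pixel one, and no client-side rescaling of an oversized image is needed.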

Social sharing embeds can cause additional problems, such as increasing memory overhead for the page.

Yes, we can hear your data team’s heads popping already. However, the simple fact is that every tracking code and every social embed that relies on external websites/servers will significantly increase load times. This can be a particular issue on older browsers and lower-end phones, where the extra overhead causes even more slowdown.

If you’re using multiple trackers, talk about consolidating them down to a single tracker. And as far as social sharing goes… Sure, it’s nice to have a dozen different buttons, but how many people are actually clicking on them?

Focus on 2-4 social networks where you have the most shares, rather than hoping for that one stray Tumblr user to come along. The speed hit isn’t worth a tiny handful of clicks.

Browser caching is nearly as old as the World Wide Web, but until recently, functionality was limited. A page could store some common graphic elements on the local browser to reduce re-accessing the content, but that was about it.

However, HTML5 greatly expands local caching options. Local Storage allows you to store huge chunks of the website once they’re downloaded, streamlining browsing.

For more advanced pages, look into Application Caching. This allows fully-functional web apps that work remotely/offline from the user’s browser cache, without constant updates from your server.

If there’s a clock running every time a user clicks on a link on your website, a redirect, even a 301, resets that clock. This is another case where sacrificing a little bit of SEO/clickthrough juice is justified for the sake of a speedup.

Use as many direct links as you possibly can, rather than forcing browsers to slog through multiple redirections that only slow down the user experience.
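One way to find links worth fixing is to follow each one through your redirect rules and see where it finally lands. The sketch below assumes the rules are available as a simple mapping from old path to new path; the paths and the `max_hops` limit are invented for illustration.

```python
def resolve_final(url, redirects, max_hops=10):
    """Follow a redirect map and return (final_url, number_of_hops)."""
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError("redirect loop or chain too long")
        seen.add(url)
    return url, hops


# Hypothetical redirect rules: old path -> new path.
REDIRECTS = {
    "/sale": "/promo",
    "/promo": "/promotions/summer",
    "/about-us": "/about",
}

chained = resolve_final("/sale", REDIRECTS)
direct = resolve_final("/contact", REDIRECTS)

print(chained)  # ('/promotions/summer', 2)
print(direct)   # ('/contact', 0)
```

Any link that reports one or more hops is a candidate for rewriting so that it points straight at the final address.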

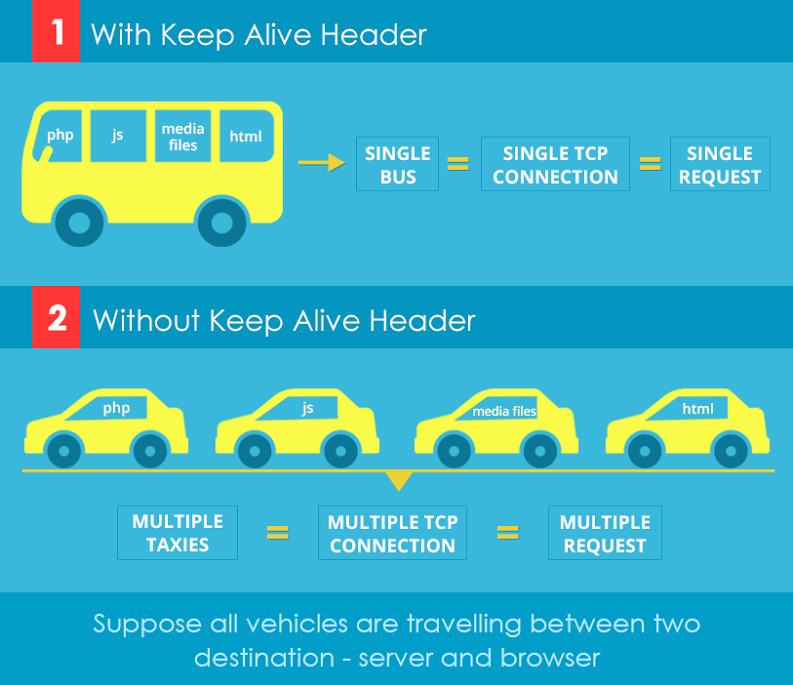

Simply put, Keep-Alive means that the browser and server establish a single, persistent TCP/HTTP connection which lasts for the duration of the web page transfer. Without Keep-Alive, the connection is constantly dropped and re-established for every file, drastically driving up load times and processor overhead.

Enabling Keep-Alive reduces loading time and lets multiple resources be transferred over one connection to the server.

Most servers now have Keep-Alive enabled by default, but that’s not guaranteed. Some penny-pinching web hosts will have it disabled by default, since it saves them a tiny bit of money. Check to see if you have Keep-Alive enabled, and turn it on if it isn’t.
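The difference is easy to observe with a throwaway local server built from Python's standard library. Everything here is a demonstration under assumptions: the handler class, the five-request count, and the loopback address are made up for the example, and a real check would target your own host instead. The sketch counts how many TCP connections the server accepts when the client reuses one connection versus opening a fresh one per file.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class CountingHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # persistent connections are the HTTP/1.1 default
    connections = 0                # TCP connections accepted so far

    def setup(self):
        # setup() runs once per accepted TCP connection, not once per request.
        super().setup()
        CountingHandler.connections += 1

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        # A known length lets the client tell where the response ends,
        # which is what makes reusing the connection possible.
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo quiet


def count_connections(keep_alive, requests=5):
    """Fetch `requests` files and return how many TCP connections it took."""
    CountingHandler.connections = 0
    server = ThreadingHTTPServer(("127.0.0.1", 0), CountingHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address
    try:
        if keep_alive:
            conn = http.client.HTTPConnection(host, port)
            for _ in range(requests):
                conn.request("GET", "/")
                conn.getresponse().read()
            conn.close()
        else:
            for _ in range(requests):
                conn = http.client.HTTPConnection(host, port)
                conn.request("GET", "/", headers={"Connection": "close"})
                conn.getresponse().read()
                conn.close()
    finally:
        server.shutdown()
        server.server_close()
    return CountingHandler.connections


with_keep_alive = count_connections(keep_alive=True)
without_keep_alive = count_connections(keep_alive=False)
print(with_keep_alive, without_keep_alive)
```

With Keep-Alive, all five requests should travel over a single connection; without it, each request pays for its own connection setup.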

If you’re stuck for other ideas, Google may be able to help. In keeping with their focus on speed and usability, they now have a free PageSpeed Insights tool. This analyzes pages and points out specific bottlenecks and other problem areas, then offers suggestions for fixing them.

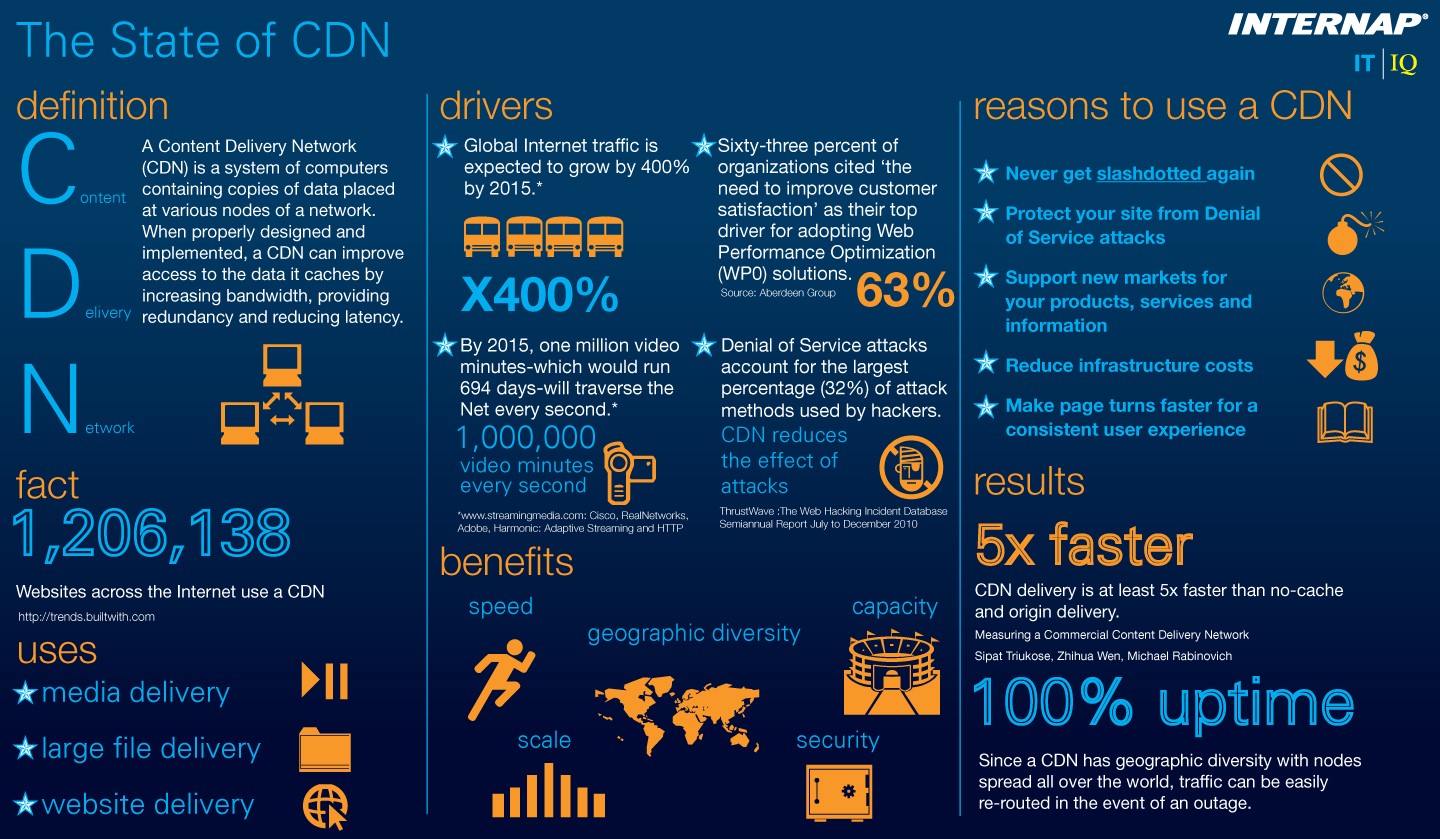

Content Distribution Networks (CDNs) can be pricey to implement, so this may not be an option for everyone. However, CDNs such as Amazon CloudFront utilize cloud-based systems to cache websites and then dynamically serve them up to users from geographically-targeted servers.

CDNs can create a true night-and-day difference in load times, especially if your own servers are struggling to keep up with demand. They also add considerable reliability to your webpage, making outages far less frequent.

Users prefer websites that load faster.

If there’s a key takeaway here, it’s merely this: Virtually anything you can do which speeds up download times while also (hopefully) reducing data usage will be worth the tradeoffs made. It’s too easy for a slow-loading site to lose viewers, or even lose search engine placement, to make it worth having a few extra bells and whistles.

Don’t let webpage bloat slow you down. Be smart about the features you implement, and remember to prioritize UX above nearly any other factor. Before you go, here’s a detailed post on the connection between site speed and SEO for you to read: https://seopressor.com/blog/connection-between-site-speed-and-seo-today/

Are there any other methods that you’ve tried to boost your page speed? Do share them with us by leaving a comment down below!

Updated: 26 February 2026
